2026 and Beyond: A CTO’s View on What’s to Come

As we move into 2026, the technology landscape is no longer defined by experimentation alone. AI has crossed the threshold from novelty to expectation, and with that shift comes a new set of responsibilities for technology leaders. The next few years will be less about chasing shiny tools and more about building durable foundations that can scale, adapt, and earn trust.

Here’s what every CTO should be thinking about as we head into 2026, and what the industry may look like by 2030.

The top priorities for CTOs in 2026

1. Metrics-first visibility in highly dynamic AI systems

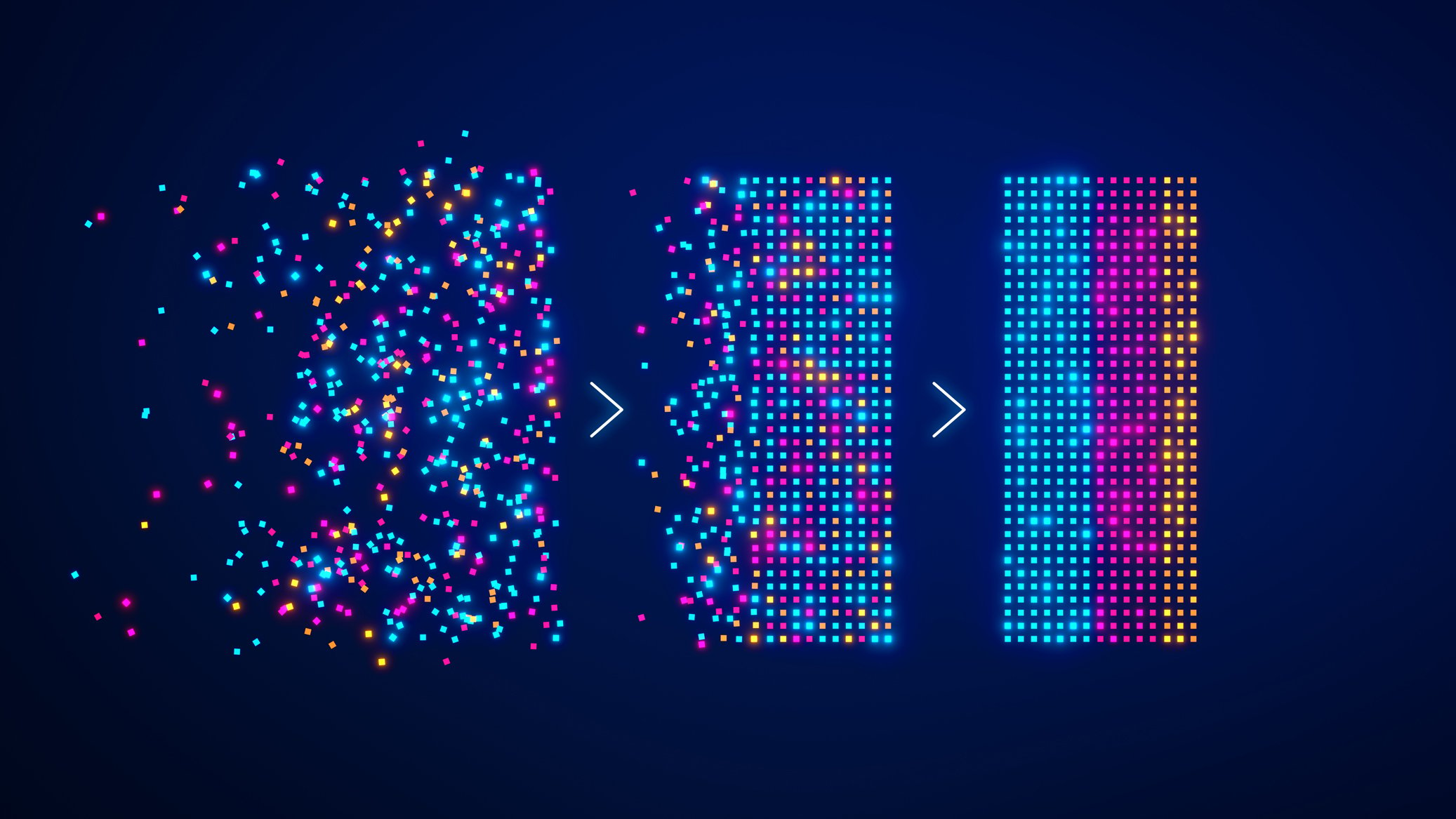

The biggest shift AI introduces isn’t just scale; it’s variability. AI-driven systems behave very differently from pre-AI software: outputs are probabilistic, feedback loops are continuous, and system behavior changes over time.

In this environment, success hinges on the ability to define, monitor, and evolve metrics that reflect real outcomes, not just infrastructure health. This goal extends well beyond traditional observability dashboards.

CTOs must ensure teams can answer questions like:

- Is the system behaving as intended right now?

- When it fails, can we pinpoint why quickly?

- Are we learning fast enough to improve the next iteration?

Metrics and telemetry must be designed in from day one, especially outcome-based metrics that highlight failure modes and undesired behaviors. This is what enables faster debugging, faster iteration, and continuous AI innovation, rather than endless troubleshooting cycles that stall progress.

Without this foundation, teams are effectively flying blind in the most dynamic environments they’ve ever operated.
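To make "outcome-based" concrete, here is a minimal sketch in plain Python. It assumes every request to an AI feature is tagged with exactly one outcome label, including the undesired ones. The class name `OutcomeMetrics` and the labels are hypothetical, chosen only for illustration; a production system would more likely export these counts through a metrics backend than hold them in memory.

```python
from collections import Counter
from dataclasses import dataclass, field


@dataclass
class OutcomeMetrics:
    """In-memory tally of labeled outcomes for an AI feature (illustrative only)."""

    counts: Counter = field(default_factory=Counter)

    def record(self, outcome: str) -> None:
        # Every request gets exactly one outcome label, desired or not.
        self.counts[outcome] += 1

    def rate(self, outcome: str) -> float:
        # Share of all recorded requests that ended in this outcome.
        total = sum(self.counts.values())
        return self.counts[outcome] / total if total else 0.0


# Hypothetical outcome labels; a real system would define its own taxonomy.
metrics = OutcomeMetrics()
for outcome in ["resolved", "resolved", "hallucinated_answer", "resolved", "user_abandoned"]:
    metrics.record(outcome)

print(f"hallucination rate: {metrics.rate('hallucinated_answer'):.0%}")  # hallucination rate: 20%
```

The point of the sketch is that failure modes are first-class labels rather than something inferred later from infrastructure dashboards.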

2. Owning the data plane is non-negotiable

Data strategy can no longer live in isolation – and in 2026, it increasingly manifests as control over the data plane.

As AI systems move horizontally across the enterprise, organizations must retain ownership of how data flows, is transformed, and is observed across teams and tools. Beyond analytics or storage, the focus shifts to control, trust, and adaptability.

Generative AI introduces new challenges here. Unlike traditional software, a GenAI observability data plane doesn’t just capture system logs or metrics – it must handle session data, model inputs and outputs, embeddings, and potentially sensitive user information. Tracking these interactions isn’t straightforward: sessions are probabilistic, multi-step, and sometimes ephemeral. Effective debugging, auditing, and compliance require new approaches to collect, store, and replay AI interactions safely and reliably.
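As a rough illustration of collecting and replaying AI interactions safely, the sketch below records each step of a session as a JSON line and masks email addresses before anything is stored. Everything here is an assumption made for the example: the `SessionLog` and `InteractionStep` names, the in-memory storage, and the single regex standing in for a real PII policy. Embeddings and access controls are left out entirely.

```python
import json
import re
from dataclasses import asdict, dataclass

# Deliberately simple pattern; a stand-in for a real PII-scrubbing policy.
EMAIL_PATTERN = re.compile(r"[\w.+-]+@[\w-]+\.[\w.-]+")


def redact(text: str) -> str:
    """Mask email addresses before the text is persisted."""
    return EMAIL_PATTERN.sub("[REDACTED_EMAIL]", text)


@dataclass
class InteractionStep:
    session_id: str
    step: int
    model_input: str
    model_output: str


class SessionLog:
    """Append-only, JSON-lines record of one multi-step AI session."""

    def __init__(self) -> None:
        self._lines: list[str] = []

    def append(self, step: InteractionStep) -> None:
        # Redact at write time so sensitive text never reaches storage.
        record = asdict(step)
        record["model_input"] = redact(record["model_input"])
        record["model_output"] = redact(record["model_output"])
        self._lines.append(json.dumps(record))

    def replay(self) -> list[InteractionStep]:
        # Rebuild the steps in their original order for debugging or audit.
        return [InteractionStep(**json.loads(line)) for line in self._lines]


log = SessionLog()
log.append(InteractionStep("s-1", 1, "Email jane@example.com the report", "Drafted the email."))
log.append(InteractionStep("s-1", 2, "Send it", "Sent."))

for step in log.replay():
    print(step.step, step.model_input)
```

Redacting on write rather than on read is one possible design choice; it trades away the ability to recover the raw text in exchange for a simpler compliance story.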

Owning your own data plane allows organizations to:

- Maintain visibility across AI-driven workflows, even when behavior varies dynamically

- Avoid vendor lock-in as architectures evolve

- Ensure data compliance and observability move together

3. A deeper commitment to faster debugging of AI failures

As AI adoption accelerates, failures become inevitable – but prolonged failure is optional.

Organizations need a far stronger commitment to rapidly diagnosing and correcting malfunctioning AI systems. This means investing in telemetry, metrics, and workflows that help teams understand not just that something broke, but why it broke and how to fix it quickly.

In AI systems, “mostly working” is often the most dangerous state. Subtle failures can persist unnoticed, eroding trust long before alarms go off. CTOs must ensure their teams can detect these issues early and respond decisively.
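One way to catch a "mostly working" system is to watch the failure rate over a recent window instead of waiting for a hard outage. The sketch below is a minimal example under stated assumptions: each request can be labeled as failed or not, and the window size and threshold are arbitrary values picked for the demonstration. `RollingFailureMonitor` is a hypothetical name, not part of any library.

```python
from collections import deque


class RollingFailureMonitor:
    """Flags when the failure rate over the last `window` requests exceeds `threshold`."""

    def __init__(self, window: int, threshold: float) -> None:
        self.results = deque(maxlen=window)
        self.threshold = threshold

    def observe(self, failed: bool) -> bool:
        """Record one outcome; return True once the window is full and over threshold."""
        self.results.append(failed)
        if len(self.results) < self.results.maxlen:
            return False  # Not enough data yet to judge.
        return sum(self.results) / len(self.results) > self.threshold


# Simulated stream: a healthy start, then roughly one request in three fails.
outcomes = [False] * 10 + [False, True, False] * 5

monitor = RollingFailureMonitor(window=10, threshold=0.2)
first_alert = next(
    (index for index, failed in enumerate(outcomes) if monitor.observe(failed)),
    None,
)
print(f"first alert at request index: {first_alert}")
```

No single request in the simulated stream looks alarming on its own; the alert only appears once the window accumulates enough quiet failures.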

Looking ahead to 2030

By 2030, new performance standards will be set for many of today’s top challenges, such as observability, data foundations, and AI integration. But new problems will continue to emerge.

Automation and scale will introduce fresh complexity. The question won’t be whether the systems can work, but how fully they can be automated without breaking trust or control. Labor will also remain constrained, increasing pressure to do more with fewer people.

At the same time, the market is likely to experience a period of disillusionment. As with every major technology shift, expectations will reset once the limits of AI-driven systems become clearer.

The next wave of AI use cases

The coming year will push beyond today’s focus on “agentic AI.”

We’ll see:

- Increased emphasis on reasoning-based use cases, where systems explain why they made a decision

- Growth in multi-agent systems, particularly around coordination and behavioral dynamics

- AI systems that collaborate with each other, not just with humans, to solve more complex problems

These use cases will demand stronger guardrails, better telemetry, and far more intentional design.

How the CTO role is changing in the age of AI

The emergence of GenAI is reshaping the CTO’s role in many organizations.

In the past, developers built software and the organization consumed it. Today, people expect to actively participate in how software behaves, and they become frustrated when they can’t influence it or when outcomes don’t match expectations.

That tension doesn’t get resolved at the developer level. It lands squarely on the CTO.

The modern CTO must coach, mentor, and align the organization – not just the technology. They are often the only person positioned to reset expectations, define boundaries, and bridge the gap between what AI can do and what people expect it to do.

Why telemetry matters more than ever

Telemetry is still dramatically underprioritized.

Many organizations push it to the end of the development cycle. They build a prototype, discover it’s impressively good at some things and impressively bad at others, and assume observability can fix everything later.

It can’t.

OpenTelemetry (OTel) and similar standards must be part of the foundation from the start. Without outcome-based telemetry, it’s nearly impossible to know whether a system is genuinely effective or quietly failing. Metrics must explicitly capture undesired outcomes, not just successes.

In AI systems, “pretty good” is often the most dangerous state to be in.

The tools we’re missing

Interestingly, many of the tools we need already exist.

OpenTelemetry, Prometheus, and Grafana aren’t new. What’s missing is ownership and the ability to deploy and operate these tools in a repeatable, integrated way. The current ad hoc approach is not remotely sustainable as AI systems scale.

Organizations must stop treating telemetry and metrics infrastructure as optional and start managing them as core, shared assets – often through open ecosystems rather than proprietary lock-in.

This leads to a broader question the industry hasn’t fully answered yet: walled gardens versus open data planes. The choices made here will shape innovation, cost, and trust over the next decade.