The Next Phase of AI Observability: From Insight to Action

AI systems don’t stay still. Models learn, data shifts, and performance changes over time, often in ways that teams don’t expect. That’s why observability has become such a critical part of building and maintaining responsible AI. It helps teams see what their systems are doing once they’re deployed, and it brings much-needed visibility to a process that can otherwise feel like a black box.

But here’s the thing. While the industry has made big strides in monitoring and evaluating AI, most tools today stop at awareness. They tell you something is wrong but not what to do about it. That gap between knowing and acting is where the next phase of observability will take shape.

Where AI Observability Stands Today

If we look at how software observability evolved, the pattern is clear. Early monitoring tools showed what was happening, then grew smarter. They began to diagnose, respond, and even self-heal. AI systems are starting to follow that same path.

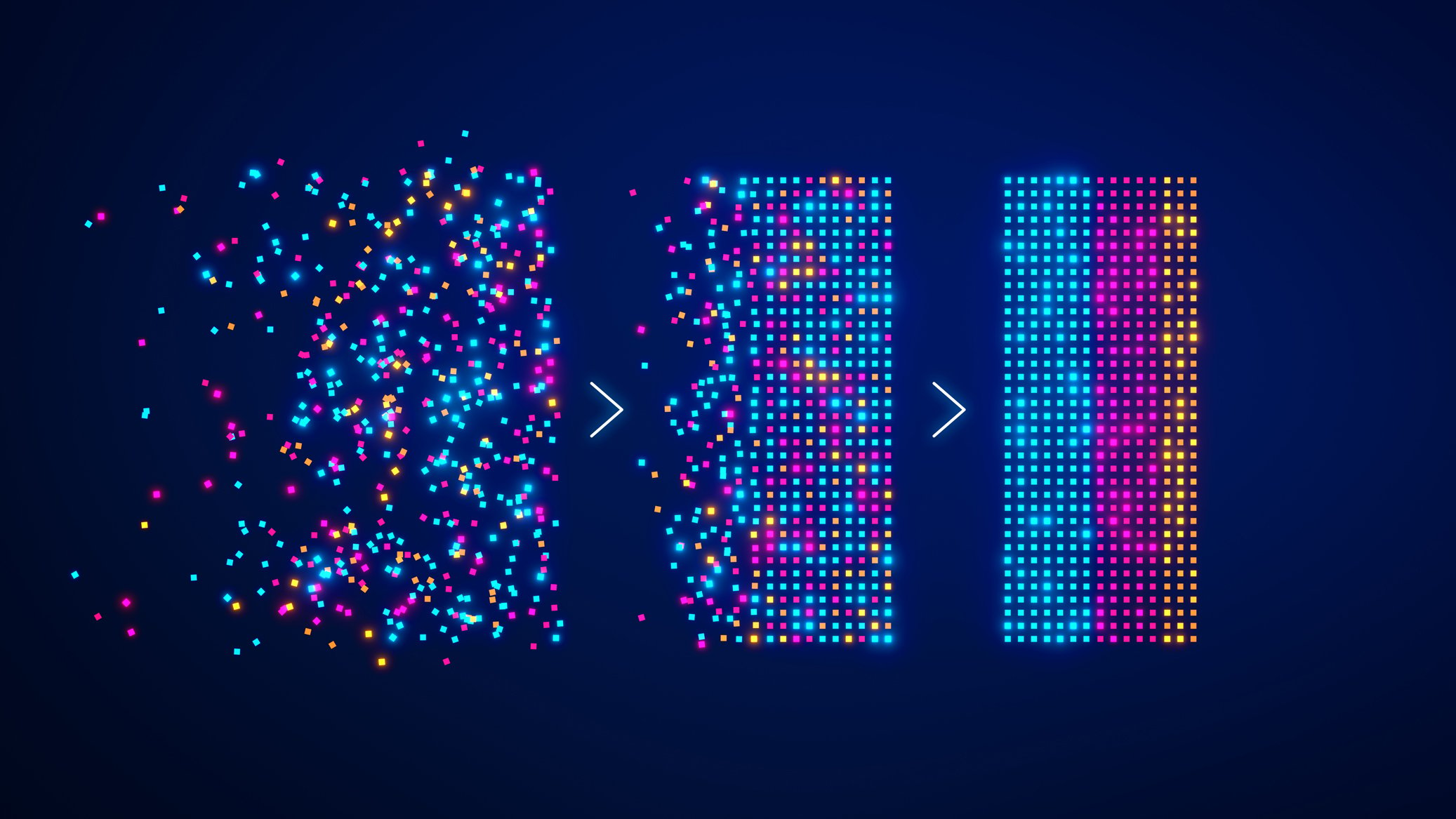

Detecting an issue is helpful, but fixing it is what keeps systems trustworthy. The next phase of observability will close that loop. It will connect insights with the ability to take action, whether that means retraining a model, correcting data issues, or automatically validating compliance before a new version is deployed.

Imagine an observability system that not only identifies a model drifting off course, but also understands why, makes the correction, and confirms the results. That’s where AI observability is headed next.
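
To make that loop concrete, here is a minimal sketch in Python of a detect, correct, and confirm cycle. It is an illustration under stated assumptions, not a description of any particular product: the `close_the_loop` function, the `LoopResult` type, the accuracy metric, and the 0.05 tolerance are all hypothetical names and values chosen for the example, and the "retraining" step is a stub.

```python
from dataclasses import dataclass
from typing import Callable


@dataclass
class LoopResult:
    drift_detected: bool   # did the metric fall outside the tolerance?
    action_taken: str      # "none" or "remediated"
    verified: bool         # is the metric within tolerance at the end?


def close_the_loop(
    baseline: float,
    evaluate: Callable[[], float],
    remediate: Callable[[], None],
    tolerance: float = 0.05,
) -> LoopResult:
    """Detect a drop against the baseline, remediate, then re-check."""
    current = evaluate()
    if baseline - current <= tolerance:
        # Insight only: nothing to fix.
        return LoopResult(False, "none", True)

    # Action: run the supplied fix (retraining, data correction, rollback...).
    remediate()

    # Confirmation: measure again instead of assuming the fix worked.
    recovered = evaluate()
    return LoopResult(True, "remediated", baseline - recovered <= tolerance)


# Hypothetical stand-ins for a deployed model and its retraining job.
state = {"accuracy": 0.70}


def evaluate_model() -> float:
    return state["accuracy"]


def retrain_model() -> None:
    state["accuracy"] = 0.91  # assumed outcome, for illustration only


result = close_the_loop(0.90, evaluate_model, retrain_model)
print(result)
```

The part of this sketch that matters is the second call to `evaluate`: the loop only counts as closed once the correction has been measured, not merely applied.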

Building Continuous Governance

The future of observability is continuous governance. It’s about creating systems that don’t just surface insights, but continuously ensure accountability, transparency, and control.

That vision includes:

- Real-time visibility into how AI systems perform and adapt

- Continuous evaluation against operational and ethical standards

- Automated remediation workflows that maintain both accuracy and compliance

- Complete traceability so that every change and decision can be verified

This approach transforms observability from a passive process into an active safeguard, turning oversight into assurance.
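
As one possible illustration of the evaluation and traceability items in the list above, the Python sketch below pairs a pre-deployment check with a hash-chained audit log, so that every approval or rejection leaves a record that can later be verified. Everything here is an assumption made for the example: the `AuditLog` and `deployment_gate` names, the metric names, and the thresholds are hypothetical, and a production system would also need durable storage and access control.

```python
import hashlib
import json
from dataclasses import dataclass, field


@dataclass
class AuditLog:
    """Append-only log in which each entry's hash covers the previous hash."""
    entries: list = field(default_factory=list)

    def record(self, event: dict) -> str:
        prev_hash = self.entries[-1]["hash"] if self.entries else ""
        payload = json.dumps(event, sort_keys=True)
        digest = hashlib.sha256((prev_hash + payload).encode("utf-8")).hexdigest()
        # Store a snapshot of the event so later changes by the caller
        # do not silently alter the log.
        self.entries.append(
            {"event": json.loads(payload), "prev": prev_hash, "hash": digest}
        )
        return digest

    def verify(self) -> bool:
        """Recompute the chain; any edited entry breaks it."""
        prev_hash = ""
        for entry in self.entries:
            payload = json.dumps(entry["event"], sort_keys=True)
            expected = hashlib.sha256((prev_hash + payload).encode("utf-8")).hexdigest()
            if entry["prev"] != prev_hash or entry["hash"] != expected:
                return False
            prev_hash = entry["hash"]
        return True


def deployment_gate(metrics: dict, minimums: dict, log: AuditLog) -> bool:
    """Approve a model version only if every metric meets its minimum."""
    failures = sorted(
        name for name, minimum in minimums.items()
        if metrics.get(name, float("-inf")) < minimum
    )
    approved = not failures
    log.record({"metrics": metrics, "minimums": minimums,
                "failures": failures, "approved": approved})
    return approved


# Hypothetical operational and ethical standards for a release.
log = AuditLog()
minimums = {"accuracy": 0.90, "fairness_score": 0.80}
first = deployment_gate({"accuracy": 0.93, "fairness_score": 0.75}, minimums, log)
second = deployment_gate({"accuracy": 0.93, "fairness_score": 0.85}, minimums, log)
print(first, second, log.verify())
```

Chaining the hashes is a design choice rather than a requirement: it makes the record tamper-evident, which is one way to support the claim that every change and decision can be verified.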

From Insight to Action

AI observability has come a long way in helping organizations see what’s happening inside their systems. The next challenge is enabling them to act on what they find. As AI becomes more deeply integrated into business operations and regulation tightens around its use, awareness alone isn’t enough.

The next generation of observability tools will close the loop. They’ll not only detect and evaluate problems but also take meaningful steps to resolve them. That shift from insight to action will define the next era of reliable and responsible AI.

At Prove AI, we believe that connecting observability with intelligent action is how organizations will finally achieve true trust in their AI systems.