As AI systems become more embedded in critical industries, ensuring they function reliably and ethically is a growing priority. However, terms like AI Safety, AI Governance, and Responsible AI are often used interchangeably, despite their distinct meanings. Understanding the differences is essential for organizations looking to build trustworthy AI systems in North America, where voluntary standards such as ISO/IEC 42001 provide guidance in the absence of comprehensive AI-specific regulation.

AI Safety: Mitigating Risks and Preventing Harm

AI safety focuses on reducing the risks that AI systems may pose to individuals, organizations, and society. This includes preventing unintended consequences, addressing security vulnerabilities, and ensuring AI systems operate within predefined ethical boundaries. Key concerns in AI safety include:

- Bias and Fairness - Ensuring AI does not reinforce discrimination

- Reliability - Avoiding unpredictable AI behavior

- Security - Protecting AI from attacks and manipulations

AI safety efforts often involve technical measures such as rigorous testing, adversarial training, and fail-safe mechanisms to prevent harm.
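
To make the idea of a fail-safe mechanism concrete, here is a minimal Python sketch. It assumes a model that returns a label together with a confidence score; the names `with_fail_safe`, `toy_model`, and the `needs_human_review` fallback are hypothetical and chosen only for illustration. The wrapper routes low-confidence or failing predictions to a safe default instead of acting on them.

```python
from dataclasses import dataclass
from typing import Callable, Dict, Tuple


@dataclass
class Decision:
    label: str
    confidence: float
    fallback_used: bool


def with_fail_safe(
    predict: Callable[[Dict[str, float]], Tuple[str, float]],
    threshold: float = 0.8,
    fallback_label: str = "needs_human_review",
) -> Callable[[Dict[str, float]], Decision]:
    """Wrap a predictor so uncertain or failing calls fall back to a safe default."""

    def guarded(features: Dict[str, float]) -> Decision:
        try:
            label, confidence = predict(features)
        except Exception:
            # Any model error is treated as "do not act automatically".
            return Decision(fallback_label, 0.0, True)
        if confidence < threshold:
            # Low confidence: defer rather than act on a weak prediction.
            return Decision(fallback_label, confidence, True)
        return Decision(label, confidence, False)

    return guarded


def toy_model(features: Dict[str, float]) -> Tuple[str, float]:
    # Hypothetical stand-in for a real model (assumption for this sketch).
    score = features["score"]
    if score < 0:
        raise ValueError("score must be non-negative")
    label = "approve" if score >= 0.5 else "deny"
    return label, abs(score - 0.5) * 2


guarded_model = with_fail_safe(toy_model, threshold=0.6)
```

Calling `guarded_model({"score": 0.55})` returns the fallback label because the toy confidence is below the threshold, while a clear-cut input such as `{"score": 0.95}` passes through unchanged. In a real system, the threshold and the fallback action would be set by the organization's risk tolerance.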

AI Governance: Establishing Oversight and Compliance

AI governance refers to the standards, policies, and frameworks (guardrails) that guide the responsible development, deployment, and monitoring of AI systems. It ensures accountability by defining roles, responsibilities, and compliance mechanisms within an organization. Core elements of AI governance include:

- Adhering to Industry Standards - Following recognized frameworks, such as the international ISO/IEC 42001 standard or the U.S. NIST AI Risk Management Framework, ensures alignment with best practices in AI development and deployment

- Transparency and Explainability - Making AI decision-making understandable and auditable

- Data Governance - Managing the quality, security, and privacy of AI training data

Unlike AI safety, AI governance incorporates organizational processes and ethical guidelines to align AI use with business and societal interests, with a focus on standards rather than government-imposed regulations in North America.

It’s important to note the difference between internal and external AI governance.

- Internal - Focuses on the internal structures and processes that ensure AI systems align with an organization's ethical and business values. This includes setting up internal policies, establishing roles and responsibilities, and fostering accountability within the organization.

- External - Refers to the external regulations, standards, and frameworks that organizations must comply with. These may include national and international regulations, industry standards, and certification frameworks designed to ensure AI systems meet legal, ethical, and societal expectations.
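
One way to make AI decisions auditable, as the governance elements above call for, is to record them in a log where later edits can be detected. The Python sketch below is a simplified illustration, not a description of any particular product: it chains each entry to the previous one with a SHA-256 hash, which makes the log tamper-evident rather than tamper-proof. The record fields and function names are hypothetical.

```python
import hashlib
import json
from typing import Any, Dict, List

GENESIS_HASH = "0" * 64  # placeholder "previous hash" for the first entry


def _entry_hash(prev_hash: str, record: Dict[str, Any]) -> str:
    # Serialize deterministically so the same record always hashes the same way.
    payload = json.dumps({"prev": prev_hash, "record": record}, sort_keys=True)
    return hashlib.sha256(payload.encode("utf-8")).hexdigest()


def append_entry(log: List[Dict[str, Any]], record: Dict[str, Any]) -> None:
    """Append a record, linking it to the hash of the previous entry."""
    prev_hash = log[-1]["hash"] if log else GENESIS_HASH
    log.append(
        {"record": record, "prev": prev_hash, "hash": _entry_hash(prev_hash, record)}
    )


def verify_log(log: List[Dict[str, Any]]) -> bool:
    """Return True only if every entry still matches its recorded hash chain."""
    prev_hash = GENESIS_HASH
    for entry in log:
        if entry["prev"] != prev_hash:
            return False
        if entry["hash"] != _entry_hash(prev_hash, entry["record"]):
            return False
        prev_hash = entry["hash"]
    return True


# Hypothetical decision records, for illustration only.
audit_log: List[Dict[str, Any]] = []
append_entry(audit_log, {"model": "credit-v1", "input_id": "a1", "decision": "approve"})
append_entry(audit_log, {"model": "credit-v1", "input_id": "a2", "decision": "deny"})
```

If any stored record is changed after the fact, `verify_log` returns `False`, because the recomputed hash no longer matches. A production system would also need to protect the most recent hash itself, for example by storing it somewhere the log's editors cannot modify.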

Responsible AI: Ethical and Human-Centered AI Design

Responsible AI is an overarching philosophy that ensures AI is designed and deployed in a way that benefits humanity while minimizing harm. It goes beyond risk mitigation to proactively align AI with ethical values. Responsible AI encompasses:

- Technological Safeguards and Governance - Implementing technologies that actively monitor and guide AI behavior to ensure ethical decision-making without the need for manual intervention

- Social Impact - Ensuring AI supports fairness, inclusion, and positive societal outcomes

- Sustainability - Reducing the environmental impact of AI computation

Responsible AI integrates AI safety and governance principles but places greater emphasis on ethical considerations and long-term impact.

The Need for an Integrated Approach

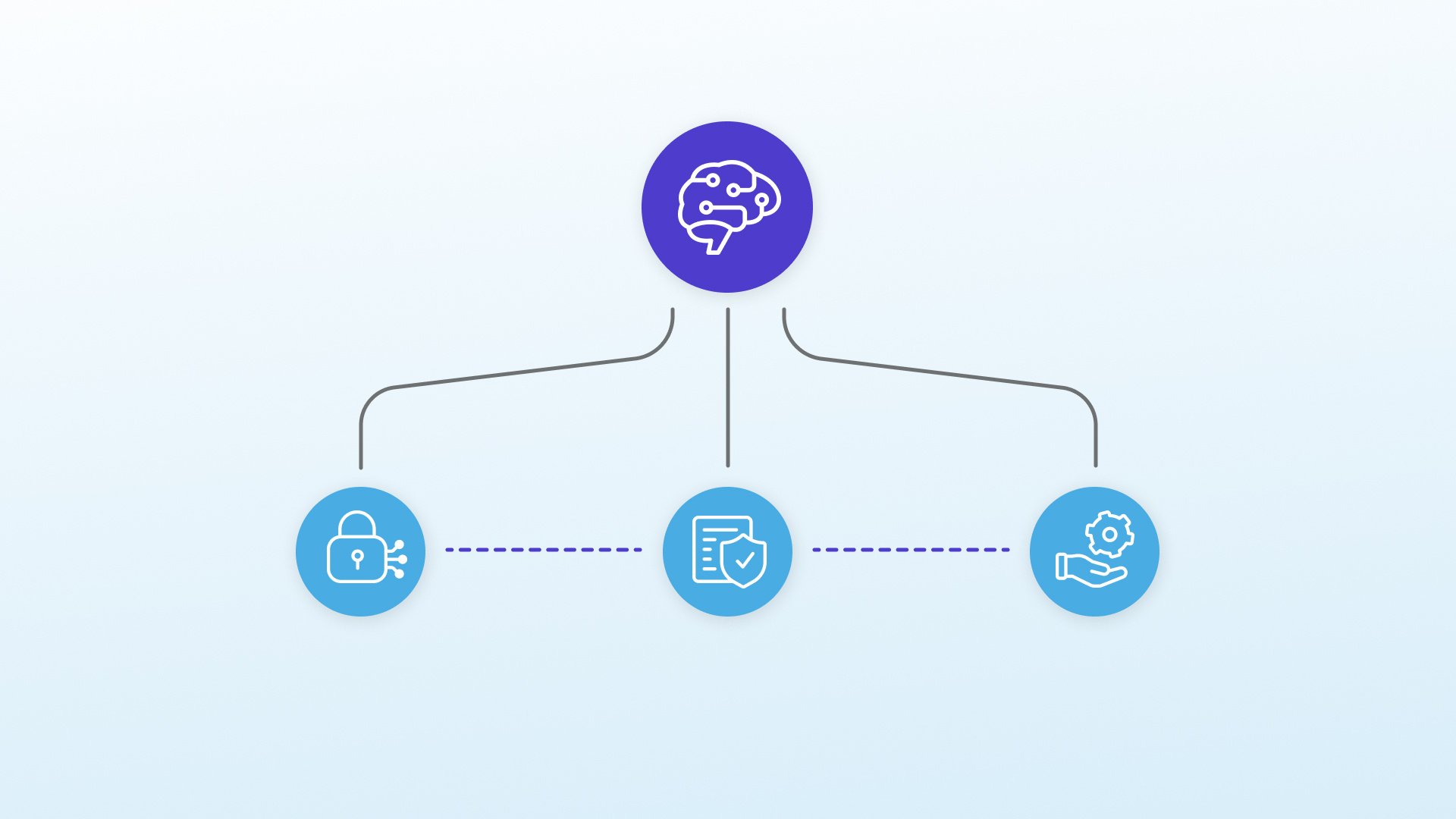

While AI safety, AI governance, and responsible AI address different aspects of AI oversight, they are interconnected. AI safety provides the technical foundation to prevent harm, AI governance ensures compliance and accountability through adherence to standards, and responsible AI frames AI development within an ethical perspective.

At Prove AI, we help organizations navigate these complexities by providing tamper-proof AI governance solutions that enhance compliance, mitigate risk, and align AI systems with best practices in AI safety and responsibility. With the focus on standards rather than regulations in the US and Canada, organizations must proactively integrate these principles to deploy AI confidently and ethically.

Are you looking to strengthen your AI oversight? Learn how Prove AI can help.