Netflix co-founder Reed Hastings recently donated $50 million to Bowdoin College to fund AI ethics research. While this is a big step for a small liberal arts school, it reflects a much larger shift: the growing realization that AI isn't just about innovation - it's also about responsibility.

AI Ethics: A Good Start, But Not Enough

Teaching students to think critically about AI is crucial. Ethical discussions shape the way we approach fairness, bias, and societal impact. But in the real world, responsible AI isn't just about good intentions - it's about ensuring AI systems are reliable, secure, and verifiable. Without mechanisms to measure and enforce accountability, "ethical AI" remains theoretical.

From Talk to Action: The Need for AI Assurance

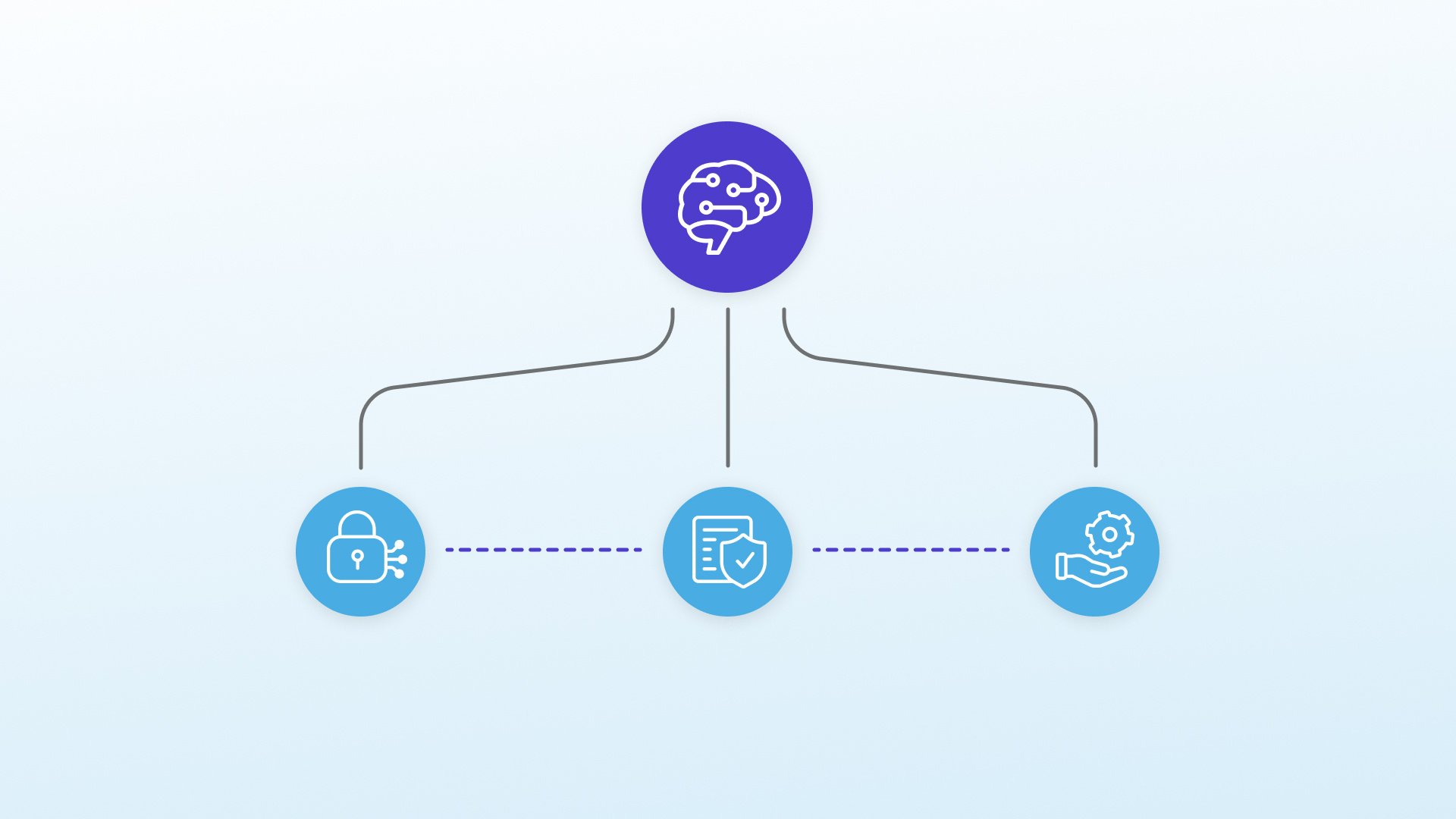

AI responsibility needs more than broad principles - it requires technical safeguards that make AI performance and decision-making transparent and auditable. Standards for security, bias detection, and reliability help ensure that AI behavior is provable, trackable, and resistant to manipulation.

By using technologies like distributed ledgers and cryptographic verification, organizations can establish tamper-evident records of AI decision-making, data usage, and model performance. These tools help turn AI oversight into something concrete - giving auditors evidence that systems operate as intended, and making bias drift or unseen interference easier to detect.
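
To make the record-keeping idea above a little more concrete, here is a minimal sketch of a hash-chained decision log in Python. It is an illustration only, not a description of any particular product or ledger: the record fields (`model`, `input_id`, `decision`), the function names, and the all-zero genesis value are assumptions chosen for the example. Each entry stores the SHA-256 hash of the entry before it, so quietly rewriting a past decision breaks the chain.

```python
import hashlib
import json

GENESIS_HASH = "0" * 64  # assumed placeholder "previous hash" for the first entry


def entry_hash(record: dict, prev_hash: str) -> str:
    """Hash a record together with the hash of the entry before it."""
    payload = json.dumps({"record": record, "prev_hash": prev_hash}, sort_keys=True)
    return hashlib.sha256(payload.encode("utf-8")).hexdigest()


def append_entry(log: list, record: dict) -> None:
    """Append a record, chaining it to the previous entry's hash."""
    prev_hash = log[-1]["hash"] if log else GENESIS_HASH
    log.append(
        {"record": record, "prev_hash": prev_hash, "hash": entry_hash(record, prev_hash)}
    )


def verify_log(log: list) -> bool:
    """Recompute every hash; an edited or reordered entry breaks the chain."""
    prev_hash = GENESIS_HASH
    for entry in log:
        if entry["prev_hash"] != prev_hash:
            return False
        if entry["hash"] != entry_hash(entry["record"], prev_hash):
            return False
        prev_hash = entry["hash"]
    return True


# Hypothetical decisions from a hypothetical model.
audit_log = []
append_entry(audit_log, {"model": "credit-model-v1", "input_id": "a1", "decision": "approve"})
append_entry(audit_log, {"model": "credit-model-v1", "input_id": "a2", "decision": "deny"})
intact = verify_log(audit_log)

# Simulate someone quietly rewriting a past decision.
audit_log[0]["record"]["decision"] = "deny"
tampered_detected = not verify_log(audit_log)
```

A chain like this only makes tampering evident; it does not prevent it. Someone who controls the whole log could recompute every hash or drop the most recent entries. That is where publishing the latest hash to an independent party, or to a distributed ledger, would come in.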

The Path Forward: Building Real Accountability

So, how do we move from discussing AI responsibility to enforcing it? It starts with adopting measurable, verifiable approaches to AI assurance - ones that make it possible to prove compliance with emerging standards and industry best practices.

Investments like Hastings' will help advance the conversation, but the next step is ensuring AI is not just ethical in theory, but accountable in practice. Because without real oversight, "ethical AI" is just talk.