How AI teams can build trust, improve collaboration, and realize greater model transparency by adopting a “bottom-up” approach.

Annotation and label quality sit at the heart of every AI system, providing the necessary scaffolding for machine learning. Yet, they remain one of the least transparent aspects of the AI lifecycle.

In practice, many AI teams are incorporating data with labels that may have been created years ago, by third-party vendors, under unknown conditions, or without any consistent metadata or audit history.

That lack of consistency and transparency adds significant risk. Uncertain data quality can amplify bias, degrade model performance, and erode trust.

This results in:

- Teams struggling to understand and debug model behavior

- Predictions that can’t be reliably traced back to source data

- Regulatory responses that are reactive, expensive, and slow

- Key stakeholders losing trust in the system

Many AI monitoring tools focus on evaluating models after deployment, so they miss a proactive opportunity: establishing trust in the source data.

Prove AI Enables Bottom-Up Explainability

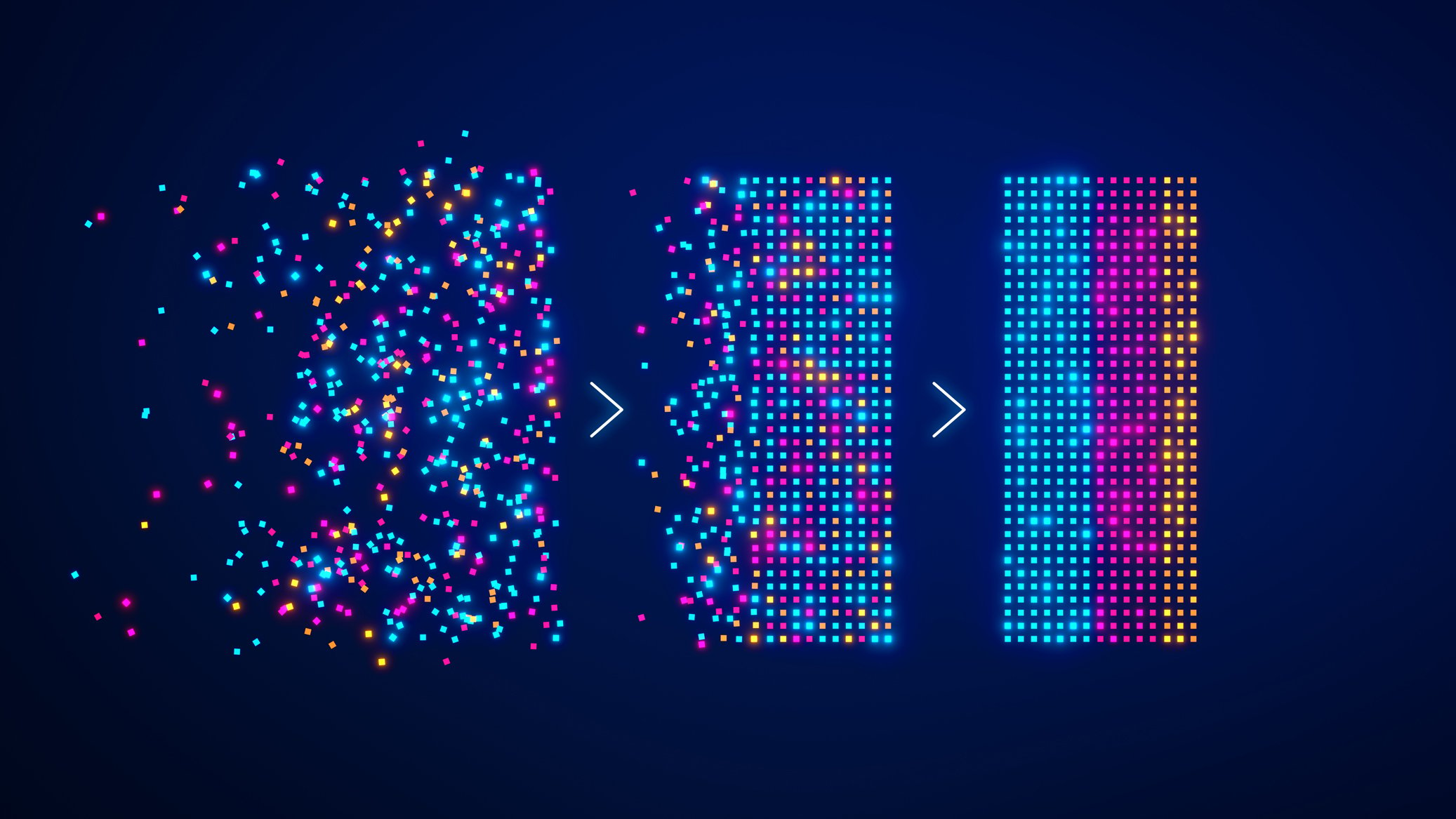

Instead of retroactively stitching together metadata from sprawling data lakes, Prove AI builds trust from the source, starting at the moment of annotation.

We do that by:

1. Automatically logging every label from the start, including who labeled what, when, and how.

2. Making annotation decisions explainable, with full lineage and labeling context, so model behavior can be traced back to specific training choices.

3. Strengthening collaboration and oversight across MLOps, data science, and business teams. Everyone gets a shared, real-time view of data quality and risks.
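To make the idea of annotation lineage more concrete, here is a minimal Python sketch of an append-only label log that captures who labeled what, when, and how. The `LabelEvent` and `AnnotationLog` names, their fields, and the example item IDs are hypothetical illustrations. They are not Prove AI's actual schema or API.

```python
from dataclasses import dataclass, field
from datetime import datetime, timezone


@dataclass(frozen=True)
class LabelEvent:
    """One annotation decision (hypothetical schema): who labeled what, when, and how."""
    item_id: str            # what: the data item that was labeled
    label: str              # the label that was assigned
    annotator: str          # who applied the label
    method: str             # how: e.g. "manual" or "model-assisted"
    guideline_version: str  # labeling context in force at the time
    timestamp: datetime = field(default_factory=lambda: datetime.now(timezone.utc))  # when


class AnnotationLog:
    """Hypothetical append-only log: events are recorded, never edited or deleted."""

    def __init__(self) -> None:
        self._events: list[LabelEvent] = []

    def record(self, event: LabelEvent) -> None:
        # Append only, so the full history of every item is preserved.
        self._events.append(event)

    def lineage(self, item_id: str) -> list[LabelEvent]:
        """Return every labeling decision for one item, oldest first."""
        # sorted() is stable, so events with equal timestamps keep insertion order.
        return sorted(
            (e for e in self._events if e.item_id == item_id),
            key=lambda e: e.timestamp,
        )


if __name__ == "__main__":
    log = AnnotationLog()
    log.record(LabelEvent("img-001", "cat", "alice", "manual", "v1"))
    log.record(LabelEvent("img-001", "dog", "bob", "model-assisted", "v2"))
    for e in log.lineage("img-001"):
        print(e.annotator, e.label, e.method, e.guideline_version)
```

Querying the lineage of `img-001` shows that its label changed from "cat" to "dog" when the guideline version and labeling method changed. That is the kind of trail a team can check before it starts debugging the model itself.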

By making annotation lineage part of your AI foundation, Prove AI turns governance into a proactive, streamlined process, instead of a costly reaction.

Trustworthy AI Starts With the Data Layer

As AI becomes more embedded in high-stakes business operations, explainability is no longer optional. It’s essential for brand protection and business success. Prove AI brings transparency to the full AI lifecycle, so your teams can build trustworthy models with confidence.

Book a demo to see how Prove AI can transform your approach to data provenance, annotation, and explainability.
