Read the full article on Smartech Daily
Watch the podcast here


From the Prove AI Team

Engineering depth, product thinking, and field notes from building the debugging layer for GenAI pipelines.

Read the full article on Grit Daily
Watch the podcast here

Visit our GitHub Repo: https://github.com/prove-ai/observability-pipeline

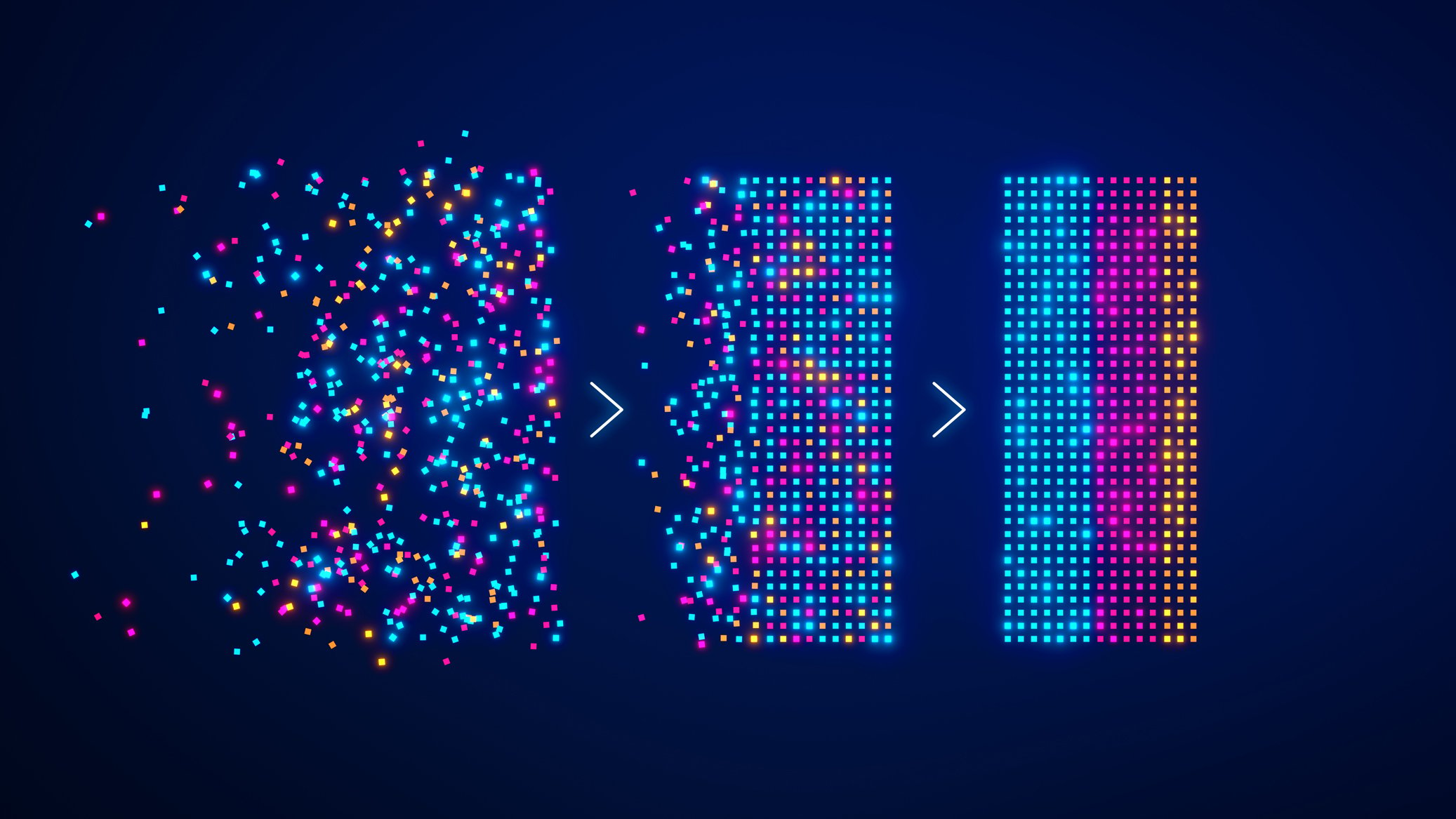

Read the full article on Newsweek

"We’ve been approaching telemetry data collection—a critical foun...

As we move into 2026, one of the biggest challenges for engineers working with AI isn't model accura...

As we move into 2026, the technology landscape is no longer defined by experimentation alone. AI has...

AI systems don’t stay still. Models learn, data shifts, and performance changes over time, often in ...